Continuous Test & Evaluation for AI-Powered Fire Control


Challenge

When developing an AI agent for a critical system, such as an Integrated Air and Missile Defense (IAMD) fire control system, developers must continuously verify that the model behaves correctly and that new updates do not introduce regressions. Manually testing the AI’s performance across every possible engagement scenario is impractical, because the number of scenarios is far too large to cover by hand.

Our Solution

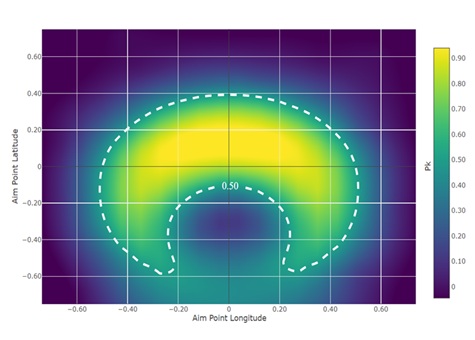

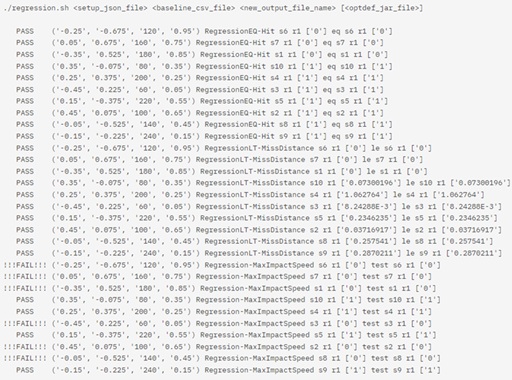

We embed the AI agent within a simulation model and use our OptDef software to build a fully automated test and evaluation (T&E) pipeline. OptDef’s adaptive sampling maps the AI’s performance across the entire battlespace (e.g., locating the contour where the probability of kill, Pk, equals 0.5) using a small number of simulation runs. For regression testing, OptDef automatically executes a batch of known scenarios and compares the AI’s behavior against stored, verified results.
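The Pk=0.5 contour search can be illustrated with a deliberately simple form of adaptive sampling: noisy bisection along range at each altitude. This is a minimal Python sketch under stated assumptions, not OptDef’s algorithm. `simulate_engagement` is a hypothetical toy model standing in for one simulation run, its linear Pk falloff is invented for illustration, and the sketch assumes Pk decreases monotonically with range. Each new batch of runs is placed where the crossing is still uncertain, which is what keeps the run count low.

```python
import random

def simulate_engagement(range_km: float, altitude_km: float, rng: random.Random) -> bool:
    """Hypothetical stand-in for one stochastic simulation run; True means a kill.

    Assumed toy model: true Pk drops linearly with range, and the Pk = 0.5
    boundary lies at range = 60 - 2 * altitude (km).
    """
    boundary_km = 60.0 - 2.0 * altitude_km
    pk = min(1.0, max(0.0, 0.5 + (boundary_km - range_km) / 40.0))
    return rng.random() < pk

def estimate_pk(range_km: float, altitude_km: float, rng: random.Random, trials: int) -> float:
    """Monte Carlo estimate of Pk at one point in the battlespace."""
    kills = sum(simulate_engagement(range_km, altitude_km, rng) for _ in range(trials))
    return kills / trials

def find_pk_contour(altitudes, max_range_km=100.0, tol_km=0.5, trials=200, seed=0):
    """Locate the Pk = 0.5 range at each altitude by noisy bisection.

    Each new sample goes to the midpoint of the bracket that still contains
    the crossing, so runs concentrate near the contour instead of being
    spread over a uniform grid. Assumes Pk decreases with range.
    Returns (contour, total_runs).
    """
    rng = random.Random(seed)
    contour, total_runs = {}, 0
    for alt in altitudes:
        near, far = 0.0, max_range_km
        while far - near > tol_km:
            mid = (near + far) / 2.0
            pk = estimate_pk(mid, alt, rng, trials)
            total_runs += trials
            if pk > 0.5:
                near = mid  # still above 0.5: the crossing is farther out
            else:
                far = mid   # at or below 0.5: the crossing is closer in
        contour[alt] = (near + far) / 2.0
    return contour, total_runs

contour, runs = find_pk_contour([0.0, 5.0, 10.0, 15.0])
for alt, range_km in contour.items():
    print(f"altitude {alt:4.1f} km -> Pk=0.5 at ~{range_km:5.1f} km")
print("simulation runs:", runs)
```

With the assumed 200 Monte Carlo trials per sampled point and a 0.5 km tolerance, each altitude costs 8 × 200 = 1,600 runs, whereas a uniform grid at the same 0.5 km spacing over 0 to 100 km would need roughly 200 × 200 = 40,000. Practical tools often use more sophisticated strategies that place points in several dimensions at once; the bisection here only shows why adaptive placement saves runs.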

Impact

Integrated directly into a CI/CD pipeline, this automated process validates the AI model’s behavior on every code commit. Because regressions are caught automatically, the development and testing cycle moves dramatically faster, and developers gain documented confidence that their AI models are battle-ready.
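To make the regression-testing loop concrete, here is a minimal sketch of a CI gate in Python. Everything in it is hypothetical: the `Scenario` fields, the `candidate_agent` stand-in for running the AI agent in simulation, the inline baseline JSON, and the Pk tolerance are illustrative assumptions, not OptDef’s interface. It replays a fixed batch of known scenarios, compares each result against the stored, verified baseline, and returns a nonzero exit code when anything deviates, which is what lets a CI/CD job fail the commit.

```python
import json
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Scenario:
    """Hypothetical, simplified description of one known engagement scenario."""
    name: str
    threat_count: int
    threat_speed_mps: float

# Hypothetical stored results that were previously reviewed and verified.
# In practice these would be loaded from a version-controlled file.
VERIFIED_BASELINES_JSON = """
{
  "single_slow_threat": {"interceptors_fired": 1, "pk": 0.85},
  "raid_of_four":       {"interceptors_fired": 4, "pk": 0.70},
  "fast_pair":          {"interceptors_fired": 4, "pk": 0.80}
}
"""

SCENARIOS = [
    Scenario("single_slow_threat", threat_count=1, threat_speed_mps=300.0),
    Scenario("raid_of_four", threat_count=4, threat_speed_mps=300.0),
    Scenario("fast_pair", threat_count=2, threat_speed_mps=2000.0),
]

def candidate_agent(s: Scenario) -> dict:
    """Hypothetical stand-in for running the updated AI agent in the simulation."""
    shots_per_threat = 2 if s.threat_speed_mps > 1500 else 1
    return {
        "interceptors_fired": s.threat_count * shots_per_threat,
        "pk": round(0.9 - 0.05 * s.threat_count, 3),
    }

def compare(baseline: dict, observed: dict, pk_tol: float = 0.02) -> list:
    """Return a description of each way `observed` deviates from `baseline`."""
    problems = []
    # Discrete decisions must match exactly.
    if observed["interceptors_fired"] != baseline["interceptors_fired"]:
        problems.append(
            f"interceptors_fired {observed['interceptors_fired']} "
            f"(verified: {baseline['interceptors_fired']})"
        )
    # In a real simulation Pk estimates vary run to run, so allow a tolerance.
    if abs(observed["pk"] - baseline["pk"]) > pk_tol:
        problems.append(f"pk {observed['pk']:.3f} (verified: {baseline['pk']:.3f})")
    return problems

def run_regression_suite(agent: Callable[[Scenario], dict]) -> dict:
    """Replay every known scenario and collect deviations keyed by scenario name."""
    baselines = json.loads(VERIFIED_BASELINES_JSON)
    failures = {}
    for s in SCENARIOS:
        problems = compare(baselines[s.name], agent(s))
        if problems:
            failures[s.name] = problems
    return failures

def main(agent: Callable[[Scenario], dict] = candidate_agent) -> int:
    """CI entry point: report regressions and return 1 if any were found, else 0."""
    failures = run_regression_suite(agent)
    for name, problems in failures.items():
        for p in problems:
            print(f"REGRESSION in {name}: {p}")
    print("PASS" if not failures else f"FAIL: {len(failures)} scenario(s) regressed")
    return 1 if failures else 0
```

A CI job would typically run this as a script ending in `sys.exit(main())`, so a nonzero return fails the build. Exact matching is used for discrete decisions such as interceptor count, while a tolerance is applied to the stochastic Pk estimate so that Monte Carlo noise alone does not trigger false alarms.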
